Minecraft mods that I use in 2024

Created: 21.04.2024 17:51, last modified: 05.05.2024 09:10


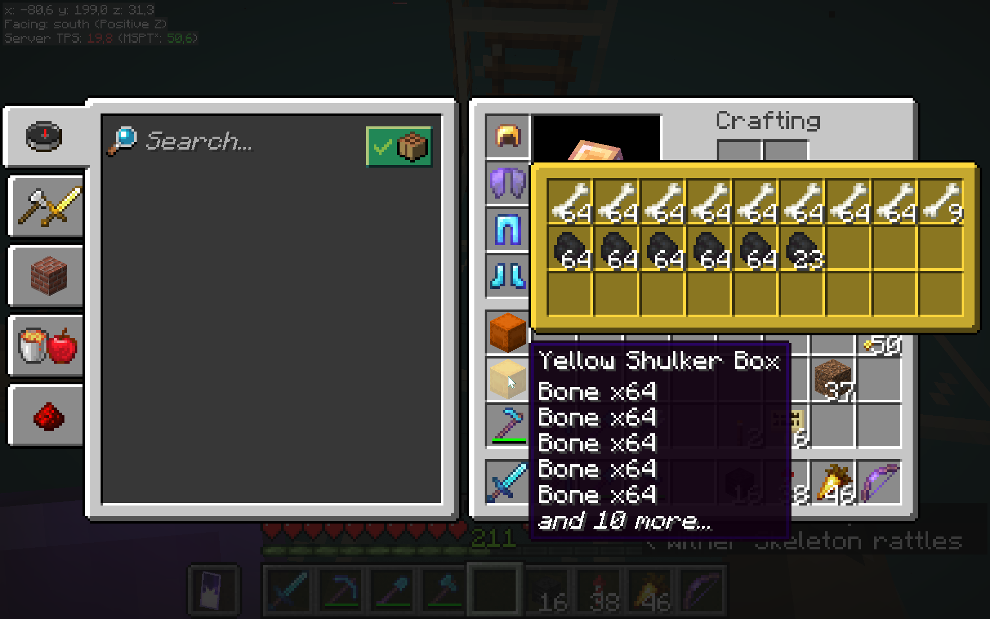

This is an update of the "mods" section of the older article called "Minecraft Link collection". Since then, there have been a few changes in both Minecraft and the mods, so I consider it useful to post an update. Many things are unchanged too, but I think it's easier to read if I copy them here verbatim instead of pointing at the older article.

As Minecraft is not really designed to be modded (other than by resource packs, which have extremely limited capabilities), and thus does not have an even remotely stable modding API, mods are always highly version specific. The following list of mods therefore probably does not apply to anything other than version 1.20.4, which I used at the time of writing. Mods that are not under constant, and very work-intensive, development will abruptly cease to work with a seemingly minor update, because some internals changed and the mod probably has to be rewritten from scratch. In general, it's an absolute mess.

Modloader

All the mods that follow are Fabric mods, meaning they require the modloader called Fabric. Fabric itself does not change the game at all - it just adds the hooks that are necessary to then be able to mod aspects of the game.

For installing Fabric, I recommend either looking at their documentation over on FabricMC.net, or using the opportunity to throw away the not-so-great Vanilla Launcher that comes with Minecraft altogether (and if you installed it from the Windows Store, it is 100 times worse still). Instead, you could use the excellent Prism Launcher from prismlauncher.org, which simply lets you select Fabric as an option when installing a new Minecraft version. It's also great for comfortably installing mods from the two biggest mod websites, Modrinth and CurseForge. Those are also the websites where you'll find all of these mods for manual download if you're not using the Prism Launcher.
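Since mods are this version specific, it can help to look at what a manually downloaded jar claims to support before dropping it into the mods folder. The following is only a minimal sketch: it assumes the jar carries a `fabric.mod.json` file at its top level with a `depends` entry for `minecraft`, which is the usual layout for Fabric mods but not something every mod is guaranteed to fill in. The function name `declared_minecraft_versions` is made up for this example.

```python
import json
import zipfile


def declared_minecraft_versions(jar_path):
    """Return the Minecraft version ranges a Fabric mod jar declares.

    The result is a list of strings. It is empty if the jar has no
    fabric.mod.json or does not declare a Minecraft dependency.
    """
    with zipfile.ZipFile(jar_path) as jar:
        if "fabric.mod.json" not in jar.namelist():
            return []  # not a Fabric mod, or an unusual layout
        metadata = json.loads(jar.read("fabric.mod.json").decode("utf-8"))

    wanted = metadata.get("depends", {}).get("minecraft", [])
    # Assumption: the value is either one range string or a list of them.
    return [wanted] if isinstance(wanted, str) else list(wanted)
```

Calling `declared_minecraft_versions("some-mod.jar")` (the file name is a placeholder) returns whatever range strings the mod author wrote. The sketch only reports them and does not try to evaluate whether a given game version matches.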
Mods that I currently use

These are the mods that I currently use (though not necessarily all the time or all at the same time). Most are client-side mods and work even with unmodded servers; some are (also) server-side mods. Some of the client-side mods might be considered a bit cheaty by some, so you probably shouldn't use them on multiplayer servers you don't control.

MiniHUD

MiniHUD is a generally useful tool. Its main function is to show selected parts of the data from the F3 debug screen in the top left corner. This allows you to e.g. display your coordinates in just one line in the top left corner, without having to keep the F3 debug screen open, which would normally block your whole view.

It also has a few other handy features. For example, it can show you the contents of shulker boxes in a proper graphical fashion when you mouse over them, instead of the textual description you get with vanilla. This is a big quality-of-life improvement, and it is completely beyond me why this isn't a feature in vanilla - the textual description feels totally out of place and inconsistent with the rest of the user interface.

MiniHUD can also overlay graphical markers over the world. This is best explained with an example: Say you want to build an AFK mob farm. As mobs can only exist within 128 blocks of you (as soon as they leave that area they instantly despawn), your AFK position needs to be chosen in a way that the whole spawn platform is within that range, but ideally not much else is. For that, MiniHUD can highlight a sphere with a radius of 128 blocks around a certain position. There is a screenshot of that below.

When spawn-proofing an area in the overworld, you usually want to know when there is enough light so that no monsters can spawn. MiniHUD also has your back on that: It can display the light level on blocks as an overlay, i.e.
every block in the world around you has its light level written on it (as a number), and that number turns green if the light level is above 0 (because then most hostile mobs are not able to spawn). Again, of course you can get that same info from the F3 debug screen, but that is an absolute PITA to use for a larger area, so what you usually do without MiniHUD is massively torch-spam everything, placing way too many torches. This feature is also great if you want to create somewhat moody lighting without creating an accidental mob farm.

Litematica

Litematica adds schematic support to Minecraft. For those that know Factorio, it works pretty much exactly like the blueprints there. For example, when you're designing a new build or farm, you usually don't want to do that in survival. You experiment in creative mode first, and only after a few iterations, once it looks or works as you want it to, you build the final version in your survival world. A lot of people do that by taking lots of screenshots, and I've done that too in the past, but it's just so painful. What Litematica allows you to do instead is to copy a schematic of your build in the creative world, and paste it into your survival world as a ghost. Now of course (without enabling cheats) your build isn't really in the survival world, but a translucent image of it will be rendered, so that you know exactly which blocks you have to place where. In addition, Litematica can also give you a material list, telling you exactly how many blocks of what type are still missing to finish your build. And last but not least, it will mark build errors, e.g. if you place down a wrong block, it will be colored red.

If you have cheats enabled, or if you play on a server where you have moderator rights, there is also a mode that will allow you to properly paste the schematic into the world. This is done by executing a ton of setblock commands, and it might take some time.
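To make the material list and the error marking a bit more concrete, here is a toy model of the idea. It is not how Litematica is actually implemented. It assumes that both the schematic and the blocks placed so far can be represented as a plain mapping from an `(x, y, z)` position to a block name, and the function names `missing_materials` and `wrong_blocks` are made up for this sketch.

```python
from collections import Counter


def missing_materials(schematic, placed):
    """Count, per block type, how many blocks are still needed.

    A position counts as missing if nothing is placed there yet or if
    the block placed there differs from what the schematic asks for.
    """
    missing = Counter()
    for pos, block in schematic.items():
        if placed.get(pos) != block:
            missing[block] += 1
    return missing


def wrong_blocks(schematic, placed):
    """Return the positions where a block other than the planned one was placed."""
    return sorted(
        pos for pos, block in placed.items()
        if pos in schematic and schematic[pos] != block
    )


# Tiny example: three planned blocks, one placed correctly, one placed wrongly.
schematic = {(0, 0, 0): "stone", (1, 0, 0): "stone", (2, 0, 0): "hopper"}
placed = {(0, 0, 0): "stone", (1, 0, 0): "dirt"}

print(dict(missing_materials(schematic, placed)))  # {'stone': 1, 'hopper': 1}
print(wrong_blocks(schematic, placed))             # [(1, 0, 0)]
```

In this simplified model a wrongly placed block shows up in both results: it is an error at its position, and the correct block for that position is still on the shopping list.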
Tweakaroo

Tweakaroo adds a ton of "Tweaks", small features that can massively improve your quality of life, especially if you're (like me) into technical Minecraft. And while using these features is widely accepted on servers oriented towards technical Minecraft, they will almost certainly be considered cheating on more survival-oriented servers - you'll understand why in a second.

So what are some of these tweaks? There are dozens, so I'll just pick three that I think are useful.

Let's start with my absolute favorite feature, the "Free Camera" mode. This allows you to jump into an emulated "spectator mode" without really changing the gamemode. You'll still be in survival, but can move around with the camera relatively freely (you're of course still limited to the blocks loaded around you). This is immensely helpful for building a farm, because you can look at parts of your farm that aren't easily accessible, but even more so for debugging it: Imagine for example standing on the AFK spot of a gold farm on the nether roof, with the mobs spawning somewhere on the spawning platforms below the nether roof. With the free cam mode, you can actually look at the spawns on the spawning platforms, something that would not be possible by switching to proper spectator mode, because the moment you switch gamemode, mobs stop spawning. However, this example also makes it obvious why this would be considered cheating on most survival-oriented servers, as it is pretty much a wallhack.

Another tweak is an autoclicker with a configurable click interval. Boring, but still helpful.

And last but not least there is the automatic tool switching: It can automatically switch out your tool when the one you're currently using runs out of durability. Imagine for example a long mining session, where you dig up tons of stone.
With this tweak, it'll automatically switch to another pickaxe (which must be in your inventory, it doesn't make them magically appear) when the durability of the one you're currently using runs out.

Sodium / IrisShaders

Sodium completely replaces the Minecraft rendering engine with a better one, where "better" means both faster and better looking. And faster does not mean a few percent faster, it means several times faster. For me, FPS increased from 2 to 20 with a 512x (ultra-high-res) texture pack in the most crammed part of the main base. This mod really made me wonder what Mojang does with all their money - as this mod is almost completely made by just ONE person. If ONE can do THAT without even having access to the source code - why can't Mojang?!

IrisShaders builds on top of Sodium to provide shaders. If you have no idea what shaders are, these provide a very thick extra layer of eye candy with things like moving leaves, reflections in the water, real shadows, and much more. If you have a high-end graphics card and want it to really sweat while you're playing Minecraft, then shaders are for you. Because Sodium is relatively new, there currently aren't any shaders developed for it - this is where IrisShaders comes into the picture: It allows loading the shader packs developed for another popular mod (Optifine). Speaking of shaders: My favorite shaders are a modified version of BSL called Complementary Shaders - but with settings that heavily deviate from the defaults (it is very configurable).

Nvidium

This mod requires a somewhat current (20xx and newer) GPU made by NVIDIA. As it uses a somewhat exotic OpenGL feature that (at least currently) only NVIDIA's GPU drivers support, it sadly will not work at all with GPUs from other manufacturers. However, if you happen to have one of those massively overpriced NVIDIA cards in your machine, this mod is going to be (Minecraft-)life-changing.

The killer feature in this is the "unlimited render distance". You read that right.
You can set render distance not only to 32 chunks (and the machine you would need for running that in vanilla has not been invented yet), but to "unlimited", which essentially means "until the GPU runs out of memory after hundreds of chunks". The way this works is that as you walk around the world and the chunks you left behind are unloaded, they are not removed from what the GPU is rendering. Minecraft no longer knows these chunks are there, so the painfully slow Java code does not process them any longer, but the GPU does - and that does not seem to make it break a sweat. Now you very obviously will not see any changes in these chunks (until you go there again), but they are still displayed. So after a while of walking or flying around in your world, it is not unusual to see blocks that are more than 1000 blocks away. It is mind-blowing to still be able to see the big mountain with your main base on it while you're 3000 blocks away - usually at way above 100 fps.

Note that this mod also depends on Sodium (see previous section), but does not work together with active shaders from IrisShaders. You can have both mods installed at the same time, as they behave well with each other, but you cannot have both active at once: The moment you turn on shaders, Nvidium will automatically turn itself off.

Lithium

These two mods are made by the same person who made Sodium, JellySquid, and they're just as mind-boggling. I honestly cannot fathom why Mojang hasn't yet hired them.
